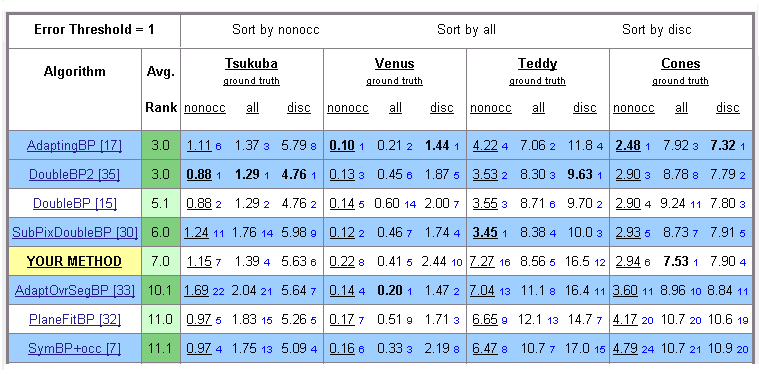

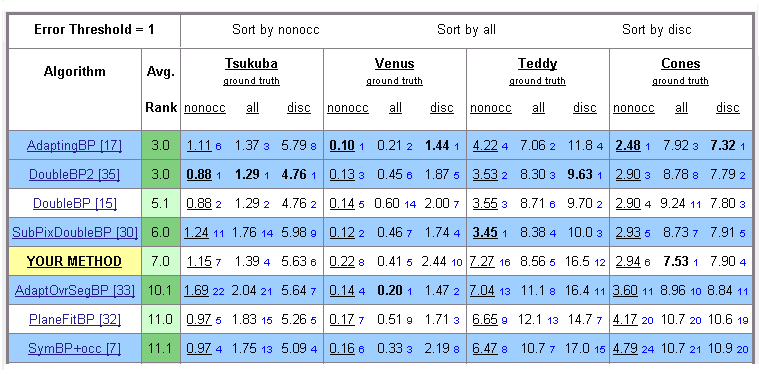

Results on the Middlebury dataset

We report our results here rather than on the Middlebury evaluation list because our algorithm is designed specifically for small-baseline multi-view depth estimation, as in the light field captured by our camera, and we could not find an existing benchmark for that problem.

1. Rank list:

All parameters are held constant across the four datasets. The results of our multi-view depth estimation are comparable to those of state-of-the-art stereo algorithms (5th as of 4/16/2008). Without applying image segmentation and plane fitting, our superior performance is due to:

2. Results:

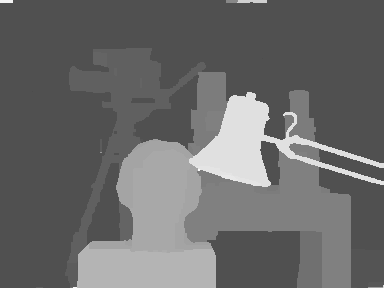

Tsukuba |

Venus |

Teddy |

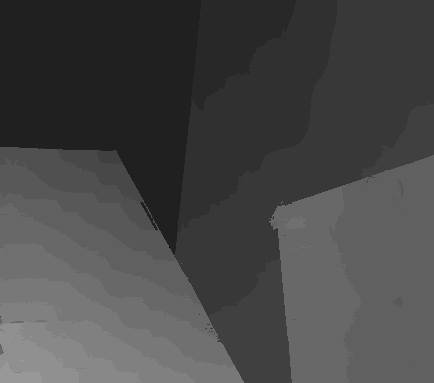

Cones |

|

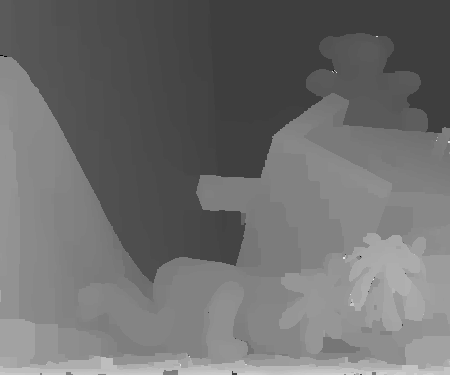

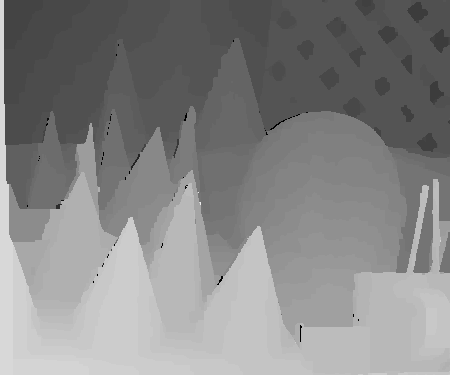

Estimated disparity map

Bad pixels (absolute disparity error > 1.0)

Signed disparity error
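For readers who want to reproduce the two error visualizations, the following is a minimal sketch of how a bad-pixel mask and a signed error map could be computed from an estimated and a ground-truth disparity map. The function name `disparity_error_maps`, the sign convention (estimated minus ground truth), and the use of `None` for pixels without ground truth are assumptions made for illustration; only the 1.0 threshold comes from the text.

```python
def disparity_error_maps(estimated, ground_truth, threshold=1.0):
    """Compare two equally sized 2-D disparity maps (lists of rows).

    Returns (signed_error, bad_mask, bad_fraction). Pixels whose ground-truth
    value is None are treated as unknown and excluded from the statistics
    (an assumption of this sketch).
    """
    signed_error = []
    bad_mask = []
    bad_count = 0
    valid_count = 0
    for est_row, gt_row in zip(estimated, ground_truth):
        err_row = []
        bad_row = []
        for est, gt in zip(est_row, gt_row):
            if gt is None:
                # No ground truth here: record nothing and do not count it.
                err_row.append(None)
                bad_row.append(False)
                continue
            err = est - gt  # assumed sign convention: estimated minus truth
            is_bad = abs(err) > threshold
            err_row.append(err)
            bad_row.append(is_bad)
            valid_count += 1
            bad_count += int(is_bad)
        signed_error.append(err_row)
        bad_mask.append(bad_row)
    bad_fraction = bad_count / valid_count if valid_count else 0.0
    return signed_error, bad_mask, bad_fraction


# Tiny hand-made example (not data from the Middlebury set).
estimated = [[10.0, 12.5], [7.0, 3.0]]
ground_truth = [[10.5, 10.0], [7.0, None]]
signed_error, bad_mask, bad_fraction = disparity_error_maps(estimated, ground_truth)
```

In this toy input only the pixel with an error of 2.5 exceeds the threshold, so one of the three pixels with known ground truth is marked bad.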

The errors are mainly around the aliased object boundaries. Because a projective multi-texture blending technique is used in the paper, these errors do not affect the results of view interpolation. Please note again that the results are obtained from the multi-view dataset. Some results of other algorithms are shown below for comparison:

Last updated: May 3, 2008